AI Adoption Is Stalling. More Training Won't Fix It.

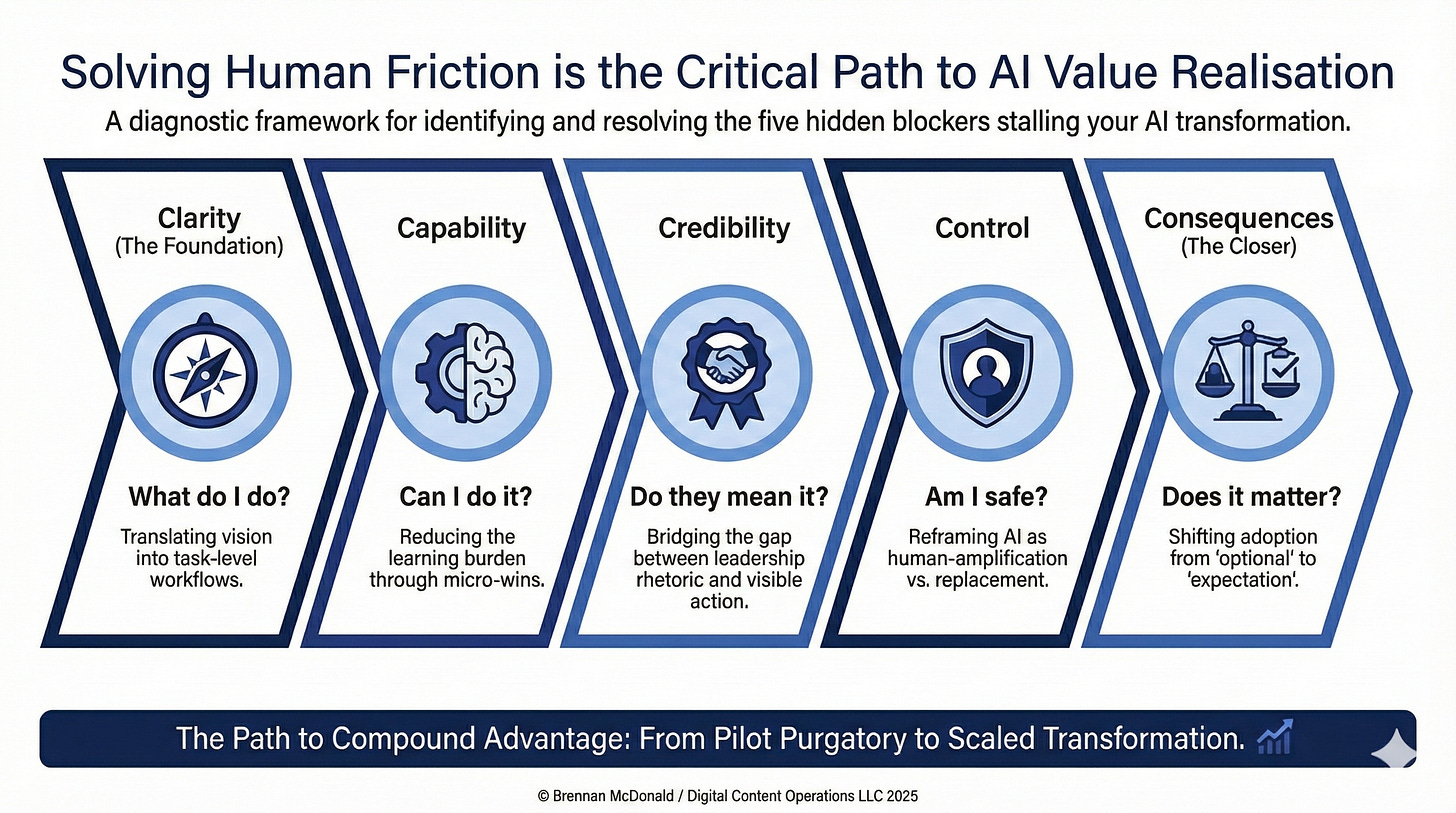

The 5C Adoption Friction Model: A diagnostic framework for identifying what's actually blocking your AI transformation

That AI pilot from six months ago? Still a pilot.

You’ve invested in the tools. Run the training sessions. Sent the announcement emails, updated the intranet, scheduled the follow-up workshops. Nothing has changed.

The licences sit underused. The people who attended training have quietly gone back to their old ways. The promising early results haven’t spread beyond the original team.

Meanwhile, the tools keep getting better. What was possible six months ago is already outdated. The gap between organisations that have figured this out and those still stuck is widening faster than most leaders realise.

If this sounds familiar, you’re not alone. Many organisations are in exactly this position: significant investment, genuine enthusiasm from leadership, and adoption that stays stubbornly low.

The instinct is to double down. More training. Clearer documentation. Another town hall. But the problem is rarely the technology. It’s people being people inside the systems they live and work in.

Why AI Adoption Stalls

When adoption stalls, leaders reach for the same playbook. More training. Better documentation. A dedicated Slack channel. Another email from the CEO.

These aren’t unreasonable responses. But they share a common assumption: that the problem is knowledge. That people aren’t adopting AI because they don’t know how, and once they learn, adoption will follow.

Sometimes true. Often not.

The Pattern in Practice

Here is how this typically plays out.

A mid-sized firm rolls out an AI writing assistant to its client-facing teams. Leadership announces the initiative, purchases licences, runs training workshops. Attendance is strong. Feedback is positive. Three months later, usage data shows fewer than 40% of employees have used the tool more than twice.

The leadership team assumes the training didn’t land. They bring in an external trainer, create tutorial videos, build a prompt library. Usage ticks up briefly, then flatlines.

The problem was never capability. Most employees understood how to use the tool. The problem was clarity. Nobody told them which tasks should go through the AI assistant. Client emails? Internal memos? First drafts only? Final documents? People were left to figure it out themselves. And when adoption is optional and ambiguous, most people take the familiar path.

Six months and significant budget later, the firm is no closer to adoption. They were solving the wrong problem.

Here’s the real challenge for leaders: resistance has multiple root causes. A team that lacks skills looks identical to a team that doesn’t trust leadership. Someone who’s confused about expectations behaves the same as someone quietly protecting their turf. From the outside, all you see is non-adoption. The cause stays hidden.

This is why so many AI initiatives get stuck. Leaders diagnose a capability gap, prescribe training, then grow frustrated when nothing shifts. Wrong problem.

I keep seeing the same five friction points. Each one needs a different response. Until you know which one you’re facing, you’re probably wasting time and money on interventions that won’t work.

The 5C Adoption Friction Model

I call it the 5C Adoption Friction Model.

The five friction points: Clarity, Capability, Credibility, Control, and Consequences.

Each one is a question people in your organisation are silently asking. They won’t raise these questions in meetings. They might not even articulate them to themselves. But until these questions are answered, they won’t change how they work.

The questions:

Clarity: “What exactly am I supposed to do differently?”

Capability: “Do I know how to do this?”

Credibility: “Does leadership actually believe in this?”

Control: “Will this make me less valuable?”

Consequences: “What happens if I just... don’t?”

Miss any one, and adoption stalls. Address the wrong one, and your interventions go nowhere.

The Five Friction Points

Clarity

“What exactly am I supposed to do differently?”

You’ll recognise this one: vague mandates, unclear ownership. Leaders say things like “we need to use AI more” or “find ways to integrate AI into your work.” People nod in meetings and do nothing afterward. Nobody’s sure who’s responsible for what, or how success gets measured.

Leaders assume the vision is obvious. It never is. People need specifics: which tasks, which tools, which workflows, by when.

The fix is translation. “Use AI more” has to become “Draft client emails in Claude before sending” or “Run your first analysis through the tool before building the spreadsheet manually.”

Capability

“Do I know how to do this?”

This is the one most leaders recognise. It shows up as avoidance: people steer clear of the tools entirely, or complete the training and never apply it. You hear “I don’t have time to learn this” or “It’s too complicated.” Underneath, there’s often a fear of looking incompetent.

This happens when people are asked to adopt new tools while still delivering their existing workload. Learning feels like extra work on top of the day job, not a replacement for parts of it.

The fix is reducing the learning burden. One workflow, one tool, one small win. Make the first step so simple it feels almost trivial. Momentum builds from there.

Credibility

“Does leadership actually believe in this?”

This one shows up as cynicism. Wait-and-see behaviour. The AI initiative gets dismissed as “flavour of the month.” People have seen programmes come and go. They’re rationally conserving energy for things that will actually last. You’ll notice senior leaders talking about AI in town halls but never visibly using it themselves.

Trust is built through demonstrated commitment, not announcements. If the executive team treats AI as something for others to adopt, the organisation reads that signal clearly.

The fix requires leaders to use the tools themselves, talk openly about their own learning curve, and consistently signal that this direction is permanent.

Control

“Will this make me less valuable or take away my autonomy?”

This one hides. You see it in middle management resistance, turf protection, information hoarding. People agree enthusiastically in meetings, then quietly block progress. Concerns about job security may be spoken. More often, they’re not.

AI changes power dynamics. People who’ve built careers on expertise, gatekeeping, or being the one who knows how things work can see that value shifting. The threat feels existential, even if it isn’t.

The fix is reframing AI as something that amplifies human judgment rather than replacing it. Give people a genuine role in shaping how AI gets used in their domain. Ask your most experienced people to stress-test the AI outputs, define quality standards, train others on where the tool falls short. When people feel ownership, resistance softens.

Consequences

“What happens if I just... don’t?”

This one appears when adoption is encouraged but not measured. No accountability for ignoring the initiative. People who resist face no downside. People who adopt early get no recognition. The rational calculation becomes obvious: why change when the path of least resistance is staying the same?

Leaders often prefer buy-in over compliance. They want people to adopt AI because they see the value, not because they’re told to. Admirable instinct. But without any consequences, you get optional change. Optional change doesn’t scale.

The fix is building adoption into performance conversations. Recognise early adopters publicly. Make non-adoption visible. This isn’t heavy-handed enforcement. It’s making clear that this is an expectation, not a suggestion.

The Main Thing

Most leaders default to solving for Capability. When adoption stalls, they run more training. When training fails, they run better training. It feels productive. It’s measurable. And it’s usually beside the point.

If people don’t believe the initiative will last, no training changes their behaviour. If nothing happens when they ignore it, the rational choice is to wait it out. If they feel their value is under threat, they’ll resist in ways that never surface in feedback forms.

Training solves for skill. It doesn’t solve for trust, or fear, or incentives, or clarity.

A reasonable challenge: these five friction points aren’t perfectly distinct. They overlap. Most organisations face several at once. And if you’ve studied change management, you’ll recognise familiar ideas here.

Fair criticism. This framework isn’t entirely new. What it offers is a diagnostic lens. A way to pause before prescribing. A set of questions that force you to look at what’s actually happening, instead of reaching for the same intervention every time.

The question isn’t “which training programme should we run?” It’s “which friction point is doing the most damage right now?”

The difficulty is that diagnosing your own organisation is hard. You’re too close. The politics are invisible precisely because you’re inside them. And every month spent solving the wrong problem is a month of budget burned, goodwill spent, and change fatigue deepened. The next initiative gets harder, not easier.

Here’s what makes this moment different: the tools are improving fast. AI capabilities that seemed leading-edge twelve months ago are now table stakes. The cost of delay isn’t static. Organisations that solve their adoption friction now will compound their advantage. Those that stay stuck will find the gap harder to close.

One more thing worth calling out: the same AI tools reshaping the world can also accelerate the transformation itself. Diagnosing friction points, generating tailored interventions, testing approaches, iterating quickly. This isn’t a twelve-month change programme. Organisations that use AI to accelerate AI adoption will move at a pace traditional approaches can’t match.

Diagnose Your Organisation

Before you close this tab, take sixty seconds.

Clarity: Can your team describe, in specific terms, what they’re supposed to do differently tomorrow? Not the vision. The actual tasks.

Capability: Have you given people time and space to learn, or is AI adoption something they’re expected to do on top of their existing workload?

Credibility: Are senior leaders visibly using AI themselves, or just talking about it in town halls?

Control: Have you addressed the unspoken concern that AI might make certain roles less valuable? Or is that conversation being avoided?

Consequences: Is there any real downside to ignoring the AI initiative entirely? Would anyone actually notice?

If you answered “no” to any of these, you’ve found a friction point worth examining.

If you answered “no” to several, here’s what I’ve learned: leaders almost always assume Capability is the primary blocker. It rarely is. More often, the friction sits with Clarity or Consequences. People aren’t struggling because they can’t learn the tools. They’re holding back because they don’t know exactly what’s expected, or because nothing happens if they ignore it.

Start with Clarity. If people can’t articulate what they’re supposed to do differently, nothing else matters yet. Then check Consequences. If the answer to “what happens if I don’t?” is “nothing,” you’ve found why your training isn’t translating into behaviour change.
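
If it helps to make the sixty-second check concrete, here is a rough sketch in Python. Only the "Clarity first, then Consequences" ordering comes from the advice above. The function name `triage`, the yes/no encoding, the handling of unanswered questions, and the order of the remaining three checks are assumptions made for illustration, not part of the framework.

```python
# The advice above: check Clarity first, then Consequences.
# The order of the remaining three is an assumption made for this sketch.
CHECK_ORDER = ["Clarity", "Consequences", "Capability", "Credibility", "Control"]


def triage(answers: dict[str, bool]) -> list[str]:
    """Return the friction points answered "no", in the order to examine them.

    `answers` maps each friction point to True ("yes, this is handled")
    or False ("no"). A missing answer is treated as "no" (an assumption:
    an unanswered question is itself worth examining).
    """
    return [point for point in CHECK_ORDER if not answers.get(point, False)]


if __name__ == "__main__":
    example = {
        "Clarity": False,
        "Capability": True,
        "Credibility": True,
        "Control": True,
        "Consequences": False,
    }
    print(triage(example))  # ['Clarity', 'Consequences']
```

The list it returns is a starting order for conversations, not a score. It cannot tell you which friction point is doing the most damage.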

Every month addressing the wrong friction point is a month of budget burned, credibility spent, and patience depleted. The window for getting this right is narrower than it looks.

Go Deeper

Some readers will take this framework and run the diagnostic themselves. That’s the point.

If you want to apply it systematically, I’ve created the 5C Adoption Friction Diagnostic. It walks you through each friction point with specific diagnostic questions and helps you identify where to focus first.

Work With Me

If you want help applying this to your organisation, I run a 90-minute diagnostic called the AI Change Leadership Intensive.

We apply the 5C framework to your specific situation. By the end, you’ll know which friction point is primary, the two or three moves that would actually shift adoption, and whether you can execute them yourself or need support.

Guarantee: If you don’t leave with at least one actionable insight, I’ll refund you in full.

AI adoption is not a technology problem. It is a people and diagnosis problem.

The tools are ready. The question is whether you are solving for the right friction.

Best regards,

Brennan

Great framework. Thing with AI is that in most organizations, training is very theoretical, while for everyone new to AI, it's very difficult to understand the applicability and use cases in your direct work. So unless there's tons of examples + creating a way for everyone to share their own, things tend to stall

Did you just reinvent ADKAR with different letters? 🙃