How to Unblock Your AI Roadmap in One Conversation

A diagnostic framework for identifying what's actually stopping AI adoption in your business

The demo worked. Then nothing happened.

You know the scene. You run a pilot for a new AI tool. The demo is impressive, the technology works, and everyone in the room nods along, agreeing that this is clearly the future. Then everything stops. The project dies in a budget meeting. An email arrives explaining the team is “too busy.” The licence cost is deemed slightly too high.

If you treat this as a logic problem, it makes no sense. The technology works. The business case is sound. The failure to adopt defies reason.

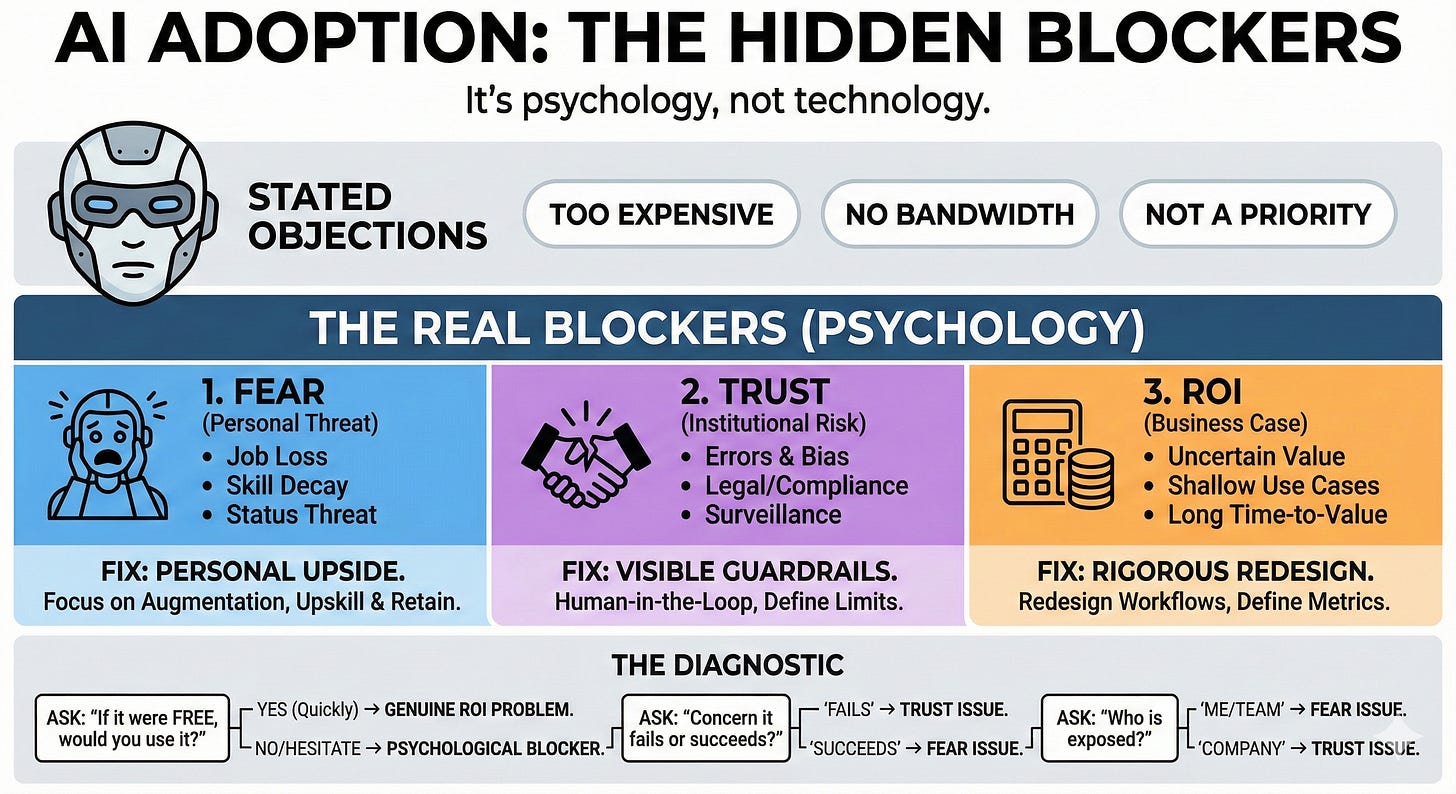

But that is precisely the mistake: treating this as a logic problem when it is actually a psychology problem. The objections you hear (cost, timing, bandwidth) are rarely the root cause. They are stories we tell after the fact, covering up deeper and more uncomfortable AI project blockers.

The data confirms the pattern. A 2025 McKinsey survey reports that 88% of organisations say they use AI in at least one function. Yet only 39% report any measurable profit impact, and most of those estimate the effect at less than 5% of earnings. Companies are not failing because the technology is bad. They are failing because they are misreading the human resistance.

To unblock your roadmap, you need to stop arguing about price and start identifying the three actual blockers: Fear, Trust, and ROI. But first, you need to understand why people cannot simply tell you which one is stopping them.

The objection is real. The reason isn’t.

Psychologists call it confabulation: we act on instinct, then build a logical story to explain ourselves. The story feels true, but it is made up after the fact. Classic research by Nisbett and Wilson showed that people have remarkably little insight into their own decisions. We watch our behaviour and guess at a plausible cause, much like a bystander would.

This is not dishonesty. It is how human thinking works. When a manager tells you an AI project is “too expensive,” they are not lying. They genuinely believe cost is the issue. But the belief was built after an emotional reaction they cannot easily name.

The mechanism underneath is loss aversion. Decades of behavioural economics research show that losses feel roughly twice as painful as equivalent gains feel good. The threat of losing control, status, or relevance outweighs any promise of efficiency. An operations lead with 15 years in the role, watching a junior analyst demo a tool that does her job in seconds, is not calculating ROI. She is calculating survival. But she cannot say that in a meeting, so she says the tool is too expensive. That sounds careful. That sounds responsible.

If you spend three weeks reworking the budget to address her concern, you will not have solved the problem. The anxiety will still be there, and it will find a new sensible-sounding objection to wear.

So if the stated reason is unreliable, what is actually going on? Three things.

Three fears wearing the same mask

Almost every stalled AI project traces back to one of three root blockers. They often overlap, but one is usually dominant. Your job is to figure out which.

Fear: the personal calculation

Fear is the question of “what happens to me?” It covers job loss, skill decay, status threat, and workload anxiety. A 2025 Harvard Youth Poll found that 59% of young people see AI as a direct threat to their job prospects. In Australia, a Dayforce survey revealed that nearly half of workers fear AI-driven job cuts, yet fewer than one in five have received any AI training.

Fear is made worse by gaps in how organisations prepare people. Executives receive training and express confidence in their company’s AI strategy. Frontline workers receive little support and stay anxious. The confidence gap runs from the top of the organisation to the bottom.

If your team believes you are building a machine to replace them, they will block the project. They will use budget, timing, or “workflow concerns” as the weapon, because those objections are safe to voice. The fear underneath is not.

Trust: the institutional risk

Trust is the question of “will this system, and the people running it, burn me?” It works on two levels: technical and political.

Technical trust concerns accuracy, explainability, bias, and compliance. McKinsey found that over half of organisations using AI have had at least one negative outcome, with errors being the most common. When someone says “Legal will never approve this,” they are often expressing doubt that the AI will behave reliably, or that the organisation will back them if it fails.

Political trust concerns how leadership will use the technology. Will it become a surveillance tool? Will it be used to justify job cuts? Will it create metrics that punish rather than support? These concerns are rarely spoken aloud, but they shape behaviour powerfully.

ROI: the genuine (or convenient) business case

Sometimes the objection is valid. Harvard Business Review reports that nearly half of executives rate their AI returns as below expectations. Pilots multiply, but real value stays shallow and uneven.

The challenge is telling the difference between a genuinely weak use case and ROI as a cover story. “Too expensive” is the safest objection in business. No one gets fired for sounding careful. When Fear or Trust is the true blocker, ROI is often where they hide.

The same words hide different motives depending on who is speaking. An executive citing “uncertain ROI” may mean it literally; push them for specifics on profit impact or strategic fit. A middle manager claiming “the team is at capacity” is more likely expressing Fear; ask what they think will happen to their role if the project succeeds. A frontline worker complaining that “the tools are clunky” may be worried about surveillance or job loss; ask what a good AI-assisted day would look like for them.

You cannot fix Fear with a spreadsheet. You cannot fix Trust with a better demo. You need to know which one you are facing before you respond. Which brings us to the diagnostic.

One question finds the truth

Next time you hit a wall, resist the urge to refine your pitch. Instead, run a simple diagnostic to find the real blocker.

Step 1: Take money off the table

Ask the stakeholder directly:

“If this tool were free tomorrow, with zero licence cost and zero setup effort, would you roll it out immediately?”

If they say yes without hesitation, you have a genuine budget or priority problem. That is solvable with a calculator and a stronger business case.

If they hesitate, or say no, money is a decoy. The resistance lives elsewhere. Move to step two.

Step 2: Separate “won’t work” from “will work too well”

Ask:

“Is your bigger concern that this won’t work well enough, or that it will work and change how people operate here?”

This question forces a choice that cleanly separates the two emotional blockers.

If they focus on reliability, accuracy, compliance risk, or data quality, you are dealing with a Trust issue. Their concern is that the system will fail or expose the organisation to harm.

If they focus on workflow disruption, team pushback, morale, or internal politics, you are dealing with a Fear issue. Their concern is that the system will succeed and change their world in ways they cannot control.

Step 3: Identify who bears the risk

Ask:

“If this went wrong, who would be most exposed?”

If the answer points to “the company,” “the brand,” “our regulators,” or “our customers,” the blocker is Trust. The perceived risk is to the institution.

If the answer points to “me,” “my team,” or “this function,” the blocker is Fear. The perceived risk is personal.

Step 4: Validate genuine ROI concerns

If step one pointed to a real budget issue, do not stop there. Vague ROI objections often fall apart when forced into specifics. Ask:

“What would have to be true twelve months from now for you to call this a success?”

Map their answer to concrete metrics: profit impact, cycle time, error rates, customer satisfaction, risk reduction. Work out whether their concern is about the size of the prize, the time to value, or confidence in execution. Each requires a different response.

The diagnostic is not a trick. It is a way of helping people put words to concerns they may not have language for. Once you know the real blocker, you can actually address it.

Different blocker, different fix

The same slide deck will not work for Fear, Trust, and ROI. Diagnosis must come before prescription.

If the blocker is Fear

Stop showing efficiency projections that look like headcount cuts. Every percentage point of “productivity gain” reads as a percentage point of “people we no longer need.”

Instead, focus on the personal upside. Show how the AI removes admin tasks they already dislike. Show a career path where AI skills increase their value and options. Protect status publicly: create visible roles where people are recognised for working with the technology, not being replaced by it. Commit to retraining with specific numbers, including training coverage, internal mobility figures, and transition support.

Fear is reduced when people see a future that includes them.

If the blocker is Trust

Stop showing aspirational demos that promise magic. Trust is built by admitting limits, not by overselling.

Run a session where you actively stress-test the model and show where it fails. Document the guardrails: human-in-the-loop policies, accuracy monitoring, incident procedures, explainability rules. Be explicit about red lines. State clearly that the AI will not be used for hidden performance tracking, that no employment decisions will be made without human review, and which specific use cases are off the table.

Trust is built by visible constraints, not reassuring words.

If the blocker is ROI

Tighten the problem statement. McKinsey’s research shows that the small minority of organisations achieving real AI returns share a common trait: they redesign workflows around the technology rather than bolting it onto existing processes. Efficiency gains from small tool additions are modest. Gains from rethinking the work itself are substantial.

Help stakeholders define success in terms they care about. Anchor the case in use cases that have already delivered measurable value. Set up leading indicators so you can show progress before the next budget cycle, and lagging metrics so you can prove impact after it.

ROI concerns, when genuine, are the most straightforward to address. They require rigour, not psychology.

The face they make when they answer

We are past the initial excitement of AI. The demos have been seen. The pilots have been run. Now comes the harder work of organisational change, and that work is fundamentally about people, not technology.

The companies that succeed in the next three years will not be those with the most advanced models. Models are rapidly becoming commodities. The winners will be the organisations that develop an honest understanding of their own psychology: the fears that stay unspoken, the trust gaps that block adoption, and the sensible-sounding objections that mask deeper resistance.

Next time someone tells you an AI project is too expensive, do not argue the maths. Ask them if they would use it if it were free, and watch their face when they answer.

That is where the real work begins.

When you need help to get unblocked

If you recognised several of these patterns playing out in your organisation, you are not alone. Many teams are navigating three or four simultaneously, which is why generic training often fails.

The AI Change Leadership Intensive is a structured diagnostic session where we map exactly which patterns are active in your specific context. We identify the few interventions most likely to shift adoption in the next 90 days. You leave with a written AI Change Snapshot you can take straight to your leadership team.

You can book your paid call now. I enjoy working with select clients and look forward to helping you unblock your AI transformation journey.
