Control: The Fear Nobody Will Admit

The friction point that hides beneath the surface

You have done everything “right”.

You secured the budget. You rolled out the licenses. You ran training sessions that got positive feedback. You communicated the vision clearly and repeatedly. You have been patient.

And still, someone on your team is not adopting. They have the skills. They understood the training. They could use AI tomorrow if they chose to.

They are choosing not to. And you are running out of explanations.

I want to offer one more possibility. Not because you missed something obvious, but because this one is genuinely hard to see from where you sit.

What do they lose if this succeeds?

The Silent Question

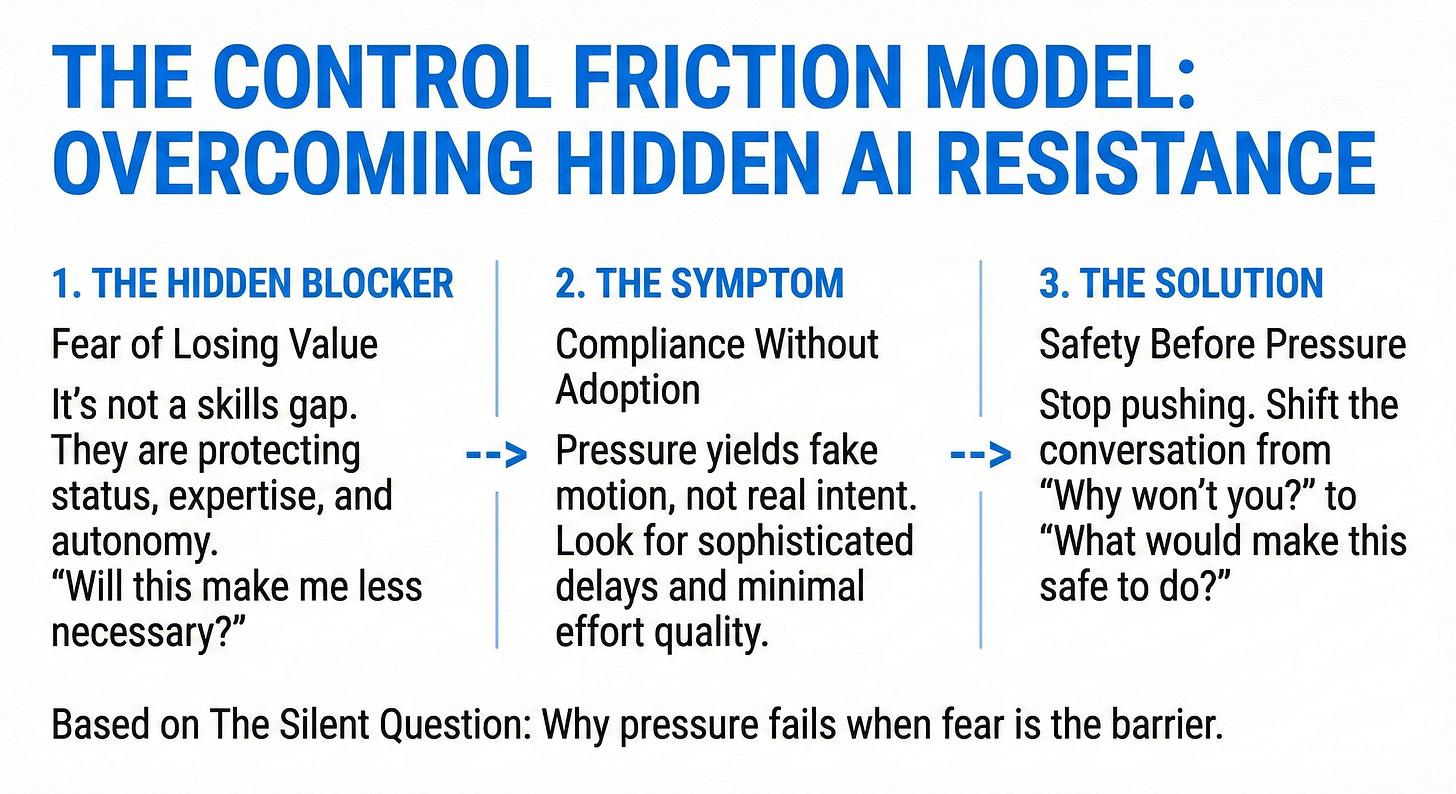

Control is the fourth friction point in the 5C Adoption Friction Model.

The first three friction points address gaps in direction, skills, and leadership credibility. Control is different. The person is not confused, struggling, or waiting to see if this initiative will last.

They are protecting something.

“Will this make me less valuable?”

Most people running an AI adoption initiative have not asked this question about their team. It is not a comfortable question. But in my experience, it explains more stalled adoption than gaps in training or communication do.

The person who is not adopting may have understood the situation more clearly than anyone. They saw what AI threatens. Not their job, necessarily. Something closer to the bone.

Their status: the position they hold in the team’s informal hierarchy.

Their expertise: the knowledge that makes them the person others come to.

Their autonomy: control over how their work gets done.

Their identity: the story they tell themselves about why they matter here.

When you ask someone to adopt AI, you may be asking them to participate in making themselves less necessary. That is not a motivation problem. That is a safety problem. And safety problems do not respond to pressure.

Why Pressure Fails

The instinct to increase pressure is understandable. You have deadlines. You have stakeholders. You have someone who appears to be blocking progress for reasons they will not articulate.

So you push. More communication. Clearer expectations. Perhaps a direct conversation about performance.

Eventually, the person complies. They start using the tools. The dashboard shows adoption. You move on.

But here is the pattern I saw repeatedly in my corporate career: compliance without adoption. The person goes through the motions. They do exactly enough to satisfy the requirement. They withhold what would make the change actually work in their domain: their expertise, their engagement, their willingness to make it succeed.

You achieved compliance. The resistance did not resolve. It learned to hide.

If you want to know whether adoption is real, look at quality of output, not volume of activity. Dashboards measure motion. They do not measure intent.

Pressure has other costs. The person learns that raising concerns leads to more pressure, not support. They stop raising concerns. They do not stop having them. The next change initiative you lead will be harder, because they remember how this one went.

The hardest cost to see: what you stopped hearing when people decided it was not safe to speak. The concerns that would have prevented problems. The expertise that would have made this work. The engagement that would have spread to others.

How to Recognise It

Control friction disguises itself. That is part of what makes it difficult.

Someone asks sophisticated questions about edge cases, governance, implementation details. The questions sound engaged. They function as delays. Each answer generates two more questions. Progress stalls without anyone saying no.

Or: someone uses the tools exactly as instructed. The outputs appear. The activity metrics look fine. But the work is not actually better. They are meeting the letter of the requirement while withholding the expertise that would make it succeed.

Or: someone is publicly enthusiastic and privately inactive. They attend the sessions. They nod in meetings. They say the right things. Their actual work remains unchanged.

These are not people who cannot adopt. These are people choosing a strategic level of engagement. Just enough to avoid pressure. Not enough to threaten their position.

Three Questions

First: Who on your team would become less essential if AI adoption succeeds? Not less employed, necessarily. Less essential. The person others come to with questions. The person who holds institutional knowledge. Who loses status if this works?

Second: Have you heard concerns about what AI means for roles, even indirectly? Jokes count: “I guess we’ll all be replaced soon.” Questions put to peers rather than to leadership count: “What happens to people like us?” Fear rarely surfaces directly. It comes out sideways.

Third: Is anyone doing careful minimal compliance? Meeting the requirement but withholding the discretionary effort? This looks like adoption on a dashboard. It feels like resistance in person.

If you said yes to two or three of these, Control is likely your primary blocker.

A caveat worth naming: minimal compliance has other causes. Bandwidth. Tool fatigue. Unclear incentives. Scepticism about whether AI actually improves the work. The questions above filter specifically for fear about value and status. If you are not hearing those signals, the issue may lie elsewhere.

There is also a related friction point that looks similar: Consequences (“What happens if I just... don’t?”). Control is “I am afraid to do this.” Consequences is “I do not have to do this.” Both produce non-adoption. They need different fixes. More on Consequences next week.

What Works Instead

The fix is not more communication. Not more training. Not more pressure.

The fix is safety before adoption. And safety is not a feeling. It is a set of organisational conditions.

Who has authority to shape how AI is used in their domain, rather than having it imposed? Who gets credit when adoption succeeds, and who gets blamed if it fails? What happens to someone’s role definition when the thing they were known for becomes automated?

These are design questions, not motivation questions.

In the organisations where I have seen Control friction resolved, the shift usually starts with a different kind of conversation. Not “why are you not adopting?” but “what would need to be true for this to feel safe?” The first conversation triggers defensiveness. The second opens something.

There is a specific way to set up that conversation. The framing matters. The questions matter. The order matters. But the principle underneath is simple: make it safer to speak than to hide.

One Thing to Do This Week

Ask one person on your team: “What do you think people are most worried about with this AI initiative?”

You are asking about “people,” not about them. This gives permission to voice concerns without admitting fear. Most people will tell you what they are worried about by telling you what “people” are worried about.

Listen without defending. Do not explain why the concern is unfounded. Do not reassure them that their job is safe. Just listen. Write down what you hear.

You are not trying to resolve the fear in one conversation. You are trying to learn what is actually happening beneath the compliance.

The Playbook

If this article described something you recognise, the playbook gives you a way to act on it.

Paid subscribers get the Stakeholder Fear/Motivator Map and a detailed playbook: a structured way to identify who on your team is experiencing Control friction, what specifically each person fears losing, and what would make adoption feel safe to them. They also get access to the full archive and every playbook, ready to apply to their own businesses and teams.

You walk away knowing exactly who to talk to, what is driving their resistance, and how to open the conversation without triggering the defensiveness that shuts it down.

Next week: Consequences. “What happens if I just... don’t?”

For hands-on help identifying which friction point is primary in your organisation, book the AI Change Leadership Intensive: https://calendly.com/brennan-mcdonald/ai-change-leadership-intensive

Subscribe to Getting AI To Work by Brennan McDonald to keep reading this post and get 7 days of free access to the full post archives.