Why Every Business Has 5 Years to Achieve 90% Operating Model Compression

Forget 10% efficiency gains. The AI era demands 90% operating model compression.

Hi there,

This week I’m going to write about AI-first thinking, and introduce the concept of Operating Model Compression.

What is operating model compression? It’s a framework that argues massive compression of people, process and technology will become the default setting for AI-first companies over the next 5 years.

Forget about 10% FTE reductions as a playbook - this will be the era of 90%+ headcount reductions and customers telling their technology vendor they need to reduce next month’s invoice by 90% or they’ll rebuild their product internally.

Operating model compression flips the “10x developer” idea on its head - if you increase productivity 10x you only need 10% of the original resource footprint. That’s a 90% compression ratio.
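The arithmetic behind that claim can be sketched in a few lines. This is only an illustration of the ratio described above; the function name `compression_ratio` is my own label, and it assumes output stays constant while productivity per unit of resource goes up.

```python
def compression_ratio(productivity_multiplier: float) -> float:
    """Fraction of the original resource footprint no longer needed,
    assuming the same output is delivered at the higher productivity."""
    if productivity_multiplier <= 0:
        raise ValueError("productivity multiplier must be positive")
    return 1 - 1 / productivity_multiplier


# Compare a traditional efficiency programme with the 10x case.
for multiplier in (1.1, 2, 10):
    ratio = compression_ratio(multiplier)
    print(f"{multiplier}x productivity -> {ratio:.0%} compression")
```

The relationship is non-linear: doubling productivity only gets you to 50% compression, and it takes the full 10x to reach 90%.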

But first, this week’s AI announcements

This isn’t doom-and-gloom - I’ve had a great week, and am having a lot of fun spending a few hours a day test-driving the new AI frontier. There were so many cool AI announcements this week I’d like to mention briefly before continuing to share my thinking on operating model compression.

The ChatGPT Codex coding agent. I tested it out and it can contribute meaningfully to small, well-defined tasks on a side project I’m working on. Tools like this will drive the autonomous agent workflows that enable compression.

The Google I/O announcements: they’re delivering great innovation, but they really are underrated in AI-hype mindshare. Try everything out on AI Studio to see how good their models are getting. The rapid experimentation you can do in AI Studio is a major enabler for rethinking operating models at pace.

The Claude “4” series model announcements. Having used Claude 3.7 Sonnet a lot for vibe coding (rapid iterative development with AI tools), I’m looking forward to burning a lot of API tokens on Claude Sonnet 4. Maybe this will be an opportunity to try out Claude Code next month - it’s the one “Max” plan I haven’t tried yet.

Right - let’s get into exploring AI-first thinking and operating model compression.

What does AI-first thinking look like?

AI-first is a term I use all the time. But what does it look like in practice?

Kerman Kohli had a fantastic post the other day outlining a cool idea: ideate on your business idea with AI, then put your business planning and strategy documentation into a Git repository as context.

Once you’ve done this, all of your vibe coding and product development would happen informed by the context of the strategic planning you did at the outset. Moving away from the Google Docs / Notion / Microsoft Office world and towards literally-everything becoming version-controlled via Git as he argues will make for much faster iteration and much easier integration with agentic AI tools as they continue to evolve.

One key realisation I’ve had through all this AI transition is you need a 100% AI-native culture. If you don’t have complete buy-in and have people that explore this stuff in their free time you’re going to struggle to create new powerful systems like this. The struggle is real and I’ve found when conducting hiring interviews this is a new criteria that is remarkably hard to hire for too. Using code editors, learning git etc can feel deeply uncomfortable and many may still think “this AI thing is a fad” or “why would i use something that gets things wrong 60% of the time”. These people will resist these newer working methodologies. - Kerman Kohli

I found this observation very interesting - there is definitely an experimentation and curiosity element to AI tools. There is a lot of “career risk” at big companies, and even the most capable people at these firms are unlikely to be curious enough to pull off a massive transformation with AI.

In heavily regulated industries like healthcare and banking, the obvious move when building out a code-first, monorepo operating model is to transform all of the regulation you have to comply with from policies in PDF format into JSON rules that everything must satisfy before a build of your product passes to production.
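To make that concrete, here is a minimal sketch of what a JSON-rules gate could look like. Everything in it is hypothetical: the rule ids, the field names, the `max` and `equals` operators and the `check` function are illustrative assumptions, not a real regulatory schema or an existing tool.

```python
import json

# Hypothetical rules, as they might look once a policy PDF has been
# translated into machine-readable JSON.
RULES_JSON = """
[
  {"id": "RET-001", "field": "data_retention_days", "op": "max", "value": 365},
  {"id": "ENC-001", "field": "encryption_at_rest", "op": "equals", "value": true}
]
"""


def check(config: dict, rules: list[dict]) -> list[str]:
    """Return the ids of every rule the product config violates."""
    failures = []
    for rule in rules:
        actual = config.get(rule["field"])
        if rule["op"] == "max":
            ok = isinstance(actual, (int, float)) and actual <= rule["value"]
        elif rule["op"] == "equals":
            ok = actual == rule["value"]
        else:
            ok = False  # unknown operators fail closed
        if not ok:
            failures.append(rule["id"])
    return failures


# Illustrative product config that keeps data for too long.
product_config = {"data_retention_days": 400, "encryption_at_rest": True}
failed = check(product_config, json.loads(RULES_JSON))
print("Compliance check failed: " + ", ".join(failed) if failed else "Compliance check passed")
```

In a continuous integration job, a non-empty list of failures would be turned into a non-zero exit code so the build cannot be promoted to production.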

AI is already generating high percentages of code at Google, Claude Code is solving bugs and building features at Anthropic, and OpenAI was using Codex internally ahead of the Codex release to build and bug fix Codex.

Klarna recently announced it was hiring some more customer service folks after going from 700 to 70 just last year (90% compression!) - they’ll still employ many fewer than previously to deal with “more complex” queries their tooling can’t deal with effectively yet. How long will that last?

When I see in the Australian press that Big 4 bank Westpac is looking at redundancies of 1,500 people, I think that’s going to become an indicator that these large firms still aren’t taking AI tooling seriously. Westpac has almost 36,000 employees as of its most recent ASX filing, so this is a reduction of only about 4% - that won’t count as meaningful for much longer.

More firms are announcing that AI tools are writing a high percentage of their code - 20-30% at Microsoft, and 30% at Google according to a recent earnings call. Startup founders are already vibe coding small apps and making money.

When AI agents get rolled out more widely, the compression available in the size of software development functions alone will be enormous. When you add the removal of redundant operations functions, this is where a lot of cost reduction can occur - and fast.

Revenue per employee at big firms: $500k. Revenue per employee at AI-first startups: $5m. We will see $10m and $20m and even $1 billion revenue per employee companies within 5 years.

What will executives do when faced with the option of doubling or tripling revenue per employee over a few years, even when proceeding at a considered and risk-averse pace?

Lean startups have already been sold for billions - although to be fair, with efficient engineering practices this was also a thing in the pre-AI era, when WhatsApp was sold to Facebook with around 50 employees.

The human cost is brutal, but hyper-capitalism demands human sacrifice for CEOs to have bullet points to speak to on quarterly earnings calls - so expect to see numbers like this increase and become more “eye opening” over time.

Tens of thousands of highly paid software developers in the USA have already lost work and many are struggling to find something to replace it. Agentic coding tools will push many to hopefully do their own thing and vibe code to some form of sustainable economic model.

As a naturally curious person, now that I have access to Deep Research, every time something comes up I fire off a Deep Research request. Just last year, I would have gone on an internet-browsing-Google-search-filetype:pdf-Wikipedia-rabbit-hole. This new world is much more efficient.

So when it comes to AI-first thinking:

You have an initial idea

You brainstorm back-and-forth with your preferred model

You check-and-challenge the thinking further

You might fire off Deep Research to get reports to act as a fact base

You rework the business context and strategy further

You put this context in Markdown files in a Git repository

This context now powers your vibe coding as you build an MVP
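The last two steps can be sketched in code. The snippet below is only one way to do it, under my own assumptions: the `build_context` helper, the `max_chars` cap and the file names are hypothetical, and a temporary directory stands in for the Git repository. It gathers every Markdown file into a single string you could paste or pipe into a coding tool as context.

```python
from pathlib import Path
import tempfile


def build_context(repo_root: Path, max_chars: int = 20_000) -> str:
    """Concatenate the repo's Markdown files into one prompt-ready string."""
    sections = []
    for path in sorted(repo_root.rglob("*.md")):
        rel = path.relative_to(repo_root).as_posix()
        body = path.read_text(encoding="utf-8").strip()
        # Label each section with its path so the model knows the source file.
        sections.append(f"## {rel}\n{body}")
    # Crude character cap as a stand-in for a real token budget.
    return "\n\n".join(sections)[:max_chars]


# Demo with a throwaway directory standing in for the Git repository.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "strategy").mkdir()
    (root / "strategy" / "vision.md").write_text("Serve small accounting firms.", encoding="utf-8")
    (root / "README.md").write_text("Project overview.", encoding="utf-8")
    context = build_context(root)
    print(context)
```

Because the context lives in plain files under version control, every change to the strategy is diffable and any agentic tool that can read the repository sees the same fact base.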

I think one of the challenges that AI-first thinking faces is human preferences. Having delivered a lot of business and technical change, and having completed change management training, I know all about resistance to change from both lived experience and academic theory.

For most people, change of any kind that they have no control over is terrifying. It can induce physical and mental reactions similar to those in life-threatening scenarios. It’s important to remember everyone is wired differently. It took me many years to realise how few people “get it” when it comes to technology, and that realisation changed how I approach communication on some projects.

Personally, I am a techno-optimist - the thing I am enjoying most about this current moment in time is the freedom to experiment with all of these tools as I see fit with no corporate guardrails.

I am, though, quite pragmatic about what these sorts of changes will do to societal cohesion as hyper-capitalism leads firms to make ever-more-optimising decisions about how they run their business in the AI era.

And that’s led me to think about my own experience in project and change management, and how the AI era is going to change how businesses design and move towards their new operating models. They will be compelled by hyper-competition: 2 people they made redundant last year taking that industry-specific tacit knowledge and vibe coding a feasible competitor in a few weeks.

The increasing capability of AI tools is highlighting how much leverage there is from using agents and AI workflows - that’s where the thinking on compression comes from.

Why are current operating models doomed?

People:

Headcount grows autonomously because of incentives faced by management

Human biases generate friction and redundant overhead that reduces efficiency

“True” headcount is internal staff + staff at external vendors + staff on the customer side

Process:

Processes start simple but grow as edge cases are added and shortcuts taken

Internal fixed costs of change mean that manual exception processing and firefighting reign

Limitations and restrictions of internal rules and external regulation drive “waste” in processes

Technology:

Business context is in people’s heads, some in emails, some in chat, some in code

A labyrinthine sprawl of technology keeps the lights on and sometimes delivers delight to customers

Enormous costs are incurred in maintenance and new change is extremely risky because of unknown unknowns

The traditional approach to target operating model transformation relies on consultants and contractors, along with many slide decks and Excel models, and you can end up spending anywhere from millions to hundreds of millions of dollars.

The return on investment is most often generated in reduced headcount through redundancy, outsourcing or offshoring. Sometimes a new technology platform will reduce total cost of ownership, but sometimes this is just an accounting delusion.

This has been business-as-usual for the past few decades. The market discipline of quarterly earnings forces everyone to play this game. Sometimes there are initiatives that produce sustainable and enduring advantages, but most gains through operating model transformation are competed away within a few years.

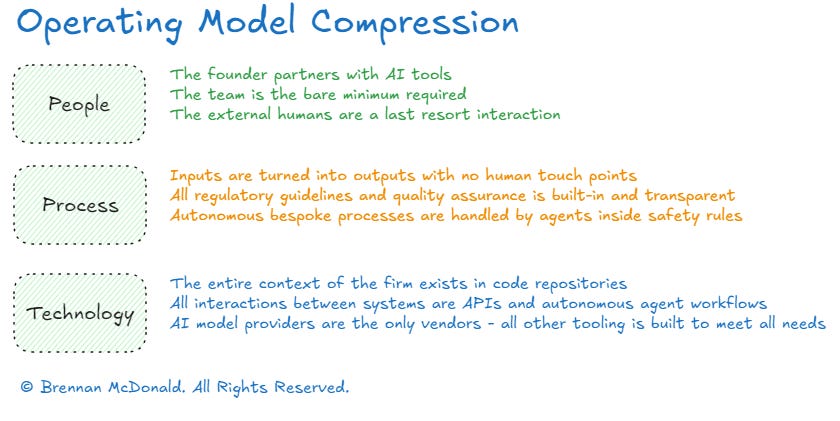

What is Operating Model Compression?

There’s a disconnect between people who understand cutting-edge AI and the daily operations of typical businesses. The guy running a carpentry shop in Des Moines isn’t current on the latest AI developments, and AI experts probably aren’t interested in the day-to-day improvements you can make across different fields. - Dwarkesh Patel

My new framework for AI-first thinking in business is called operating model compression. The target operating model is a favourite phrase of consultants and C-level executives alike. Operating model compression scales the ambition to match the technology potential of AI tooling.

The idea is that you have a way of doing things as a business today - it’s not good enough for whatever reason (most often legacy technology, process inefficiency or too many bums on seats that need to go by the end of the quarter) - and you want to streamline, optimise or enhance the entire way you do things for customers or just a specific function.

In the AI-first era, operating model compression takes the traditional methods of doing this and pours jet fuel on them through back-and-forth partnering with AI. That accelerates the returns for shareholders and drastically lowers the cost of either replacing a business entirely with a new operating model or radically slashing cost-to-serve.

People:

The founder partners with AI tools

The team is the bare minimum required

The external humans are a last resort interaction

Process:

Inputs are turned into outputs with no human touch points

All regulatory guidelines and quality assurance are built in and transparent

Autonomous bespoke processes are handled by agents inside safety rules

Technology:

The entire context of the firm exists in code repositories

All interactions between systems are APIs and autonomous agent workflows

AI model providers are the only vendors - all other tooling is built to meet all needs

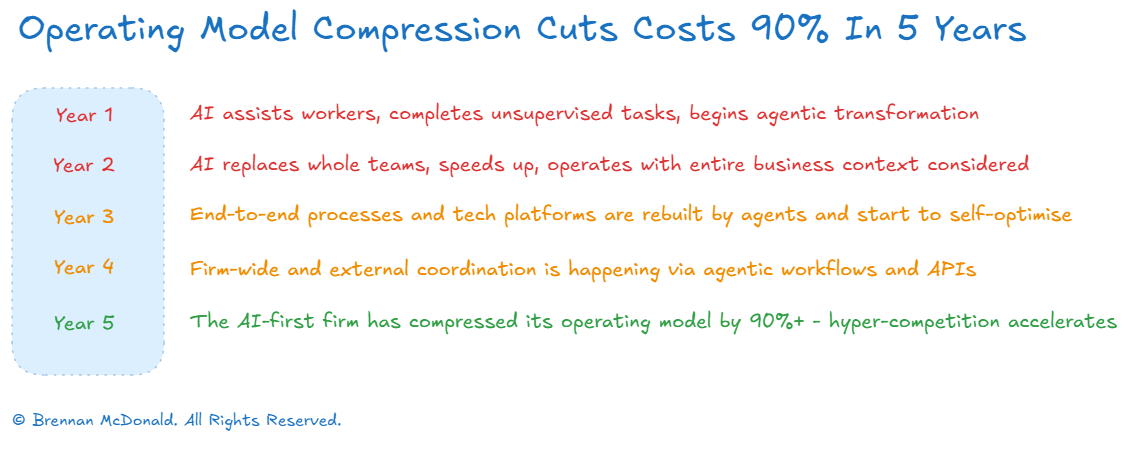

What scale of compression might happen?

I think Operating Model Compression is a good framework to start thinking about the job loss impacts of AI technology. The scale of compression I’m thinking of, to frame the following section, goes like this:

Instead of having multiple C-level executives, you have a founder and a handful of highly skilled domain experts

Every function you would once have hired an expert to build out and staff has its own agents - operating inside the code-like business context

All of the agents can operate inside safety guardrails and meet regulatory guidelines and the preferences of the founder

External regulators have their own agents - monitoring real-time or as agreed - read-only access to almost everything or just agreed regulatory reporting data sets - it’s the only way they can handle the automated enforcement agent bots

Auditors have their own agents - monitoring every transaction in real time - this agent is the compromise the accountant lobby group made with the government to keep paying retired partners’ pensions

You have an optimisation and “clean up” crew of highly skilled engineers whose focus is to “help the agents sing”, they don’t do much except in crises - kind of like security guards to check the automated factory

One-by-one, every single external vendor, consultant, expert, or service whose value can be recreated cheaper inside the firm is cloned by an internal agent

Yes - this is at least 5 years away - but if you think 10 steps ahead instead of just one, why wouldn’t this be where we are headed? I’m interested in hearing the “why nots” from people. I know there are many limitations to AI technology today, but given the current rate of progress, with capability improving every few months, what am I missing or getting wrong here?

There are so many aspects of how a business runs today that are nowhere near as complicated as solving a frontier math problem or creating new types of materials or even coding a complex software backend service. I don’t think regulatory barriers are as big a deal as some critics are making out - why? - because legislative and regulatory guidance and case law is perfectly suited for LLM optimisation.

When linked to agentic workflows, and operating an AI-first business through code-everything mentality, regulatory compliance is just another part of the automated software build process managed through continuous integration.

I think 90%+ reductions in spend across people, process and technology will be achieved by AI-first firms inside 5 years. This is going to have massive societal implications. There will be some new jobs created - but nowhere near as many as some think. And hyper-competition will quicken because of these market forces.

The Operating Model Compression Playbook

Operating Model Compression is already happening. Deep Research writing a briefing paper. Windsurf vibe coding a new feature. Claude Code fixing bugs that never would have been fixed previously. Startup founders building entire Minimum Viable Products and charging for them without raising $1. Graduate and entry-level roles slashed in half.

If you’re thinking about how to apply this to your own business, here’s a high-level playbook. It’s how I’d start from scratch to compress any operating model.

You could implement this plan in 24 hours if you’re an AI-first startup or on the frontier with technology, but let’s stretch it out for a few years to account for the friction and complexity of most businesses.

Month 1: Deploy AI tools universally across the business and let people cook

Month 2: Migrate all business context to Git repositories - promote the people who came up with all the good ideas - get rid of their managers

Months 3-6: Design compressed operating model and send the implementation work packages to AI agents (20% reduction achieved)

Month 6+: Keep deploying agents and replacing team-by-team every workflow involved in delivering value to your customers (30% reduction)

Year 1 - Year 5: Full Operating Model Compression continues product by product, process by process, platform by platform - your management layers become AI agents coordinating between themselves via API (90% reduction)

Operating model compression is already happening - it’s just nowhere near the mainstream consciousness yet. More workers are using tools like ChatGPT as a sidekick, and over the next year we’ll start hearing more stories of founders vibe coding to $100 million in ARR or of massive redundancies at big firms that rebuilt entire functions and technology platforms.

The AI tools exist and get better every few weeks. The math is compelling. You have, in the most optimistic scenario, 5 years to compress your operating model 90% before a two-person AI-powered startup eats your lunch. The clock is ticking. What's your first move? Tell me what you’d compress first.

Best regards,

Brennan

This might be somewhat off topic, but do you really think the next five years will proceed as you predict if 90% of the workforce is rendered redundant? Are you not worried the pitchfork-wielding neo-Luddite mobs will be burning down data centres and attacking corporate HQs once the unemployment rate hits a mere 20-30 per cent? Might that not be a (predictable – as you flag but don't really explore) fly in the Operating Model Compression Playbook?

I like the new phrase. Thanks. I will use it.