Your IT Policy Is About to Become a Talent Problem

Most corporate workers can't access the tools where the larger productivity gains live

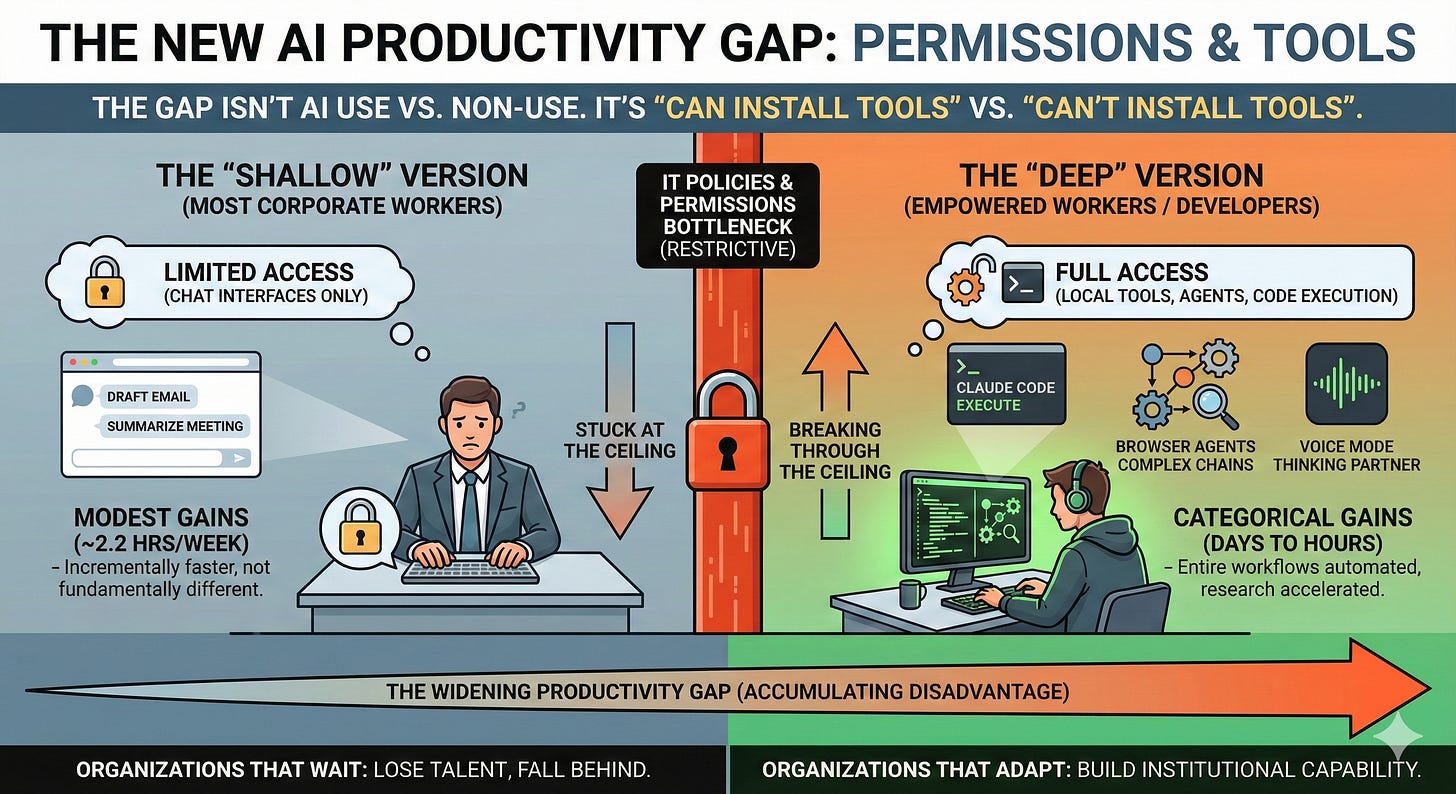

The gap isn’t between people who use AI and people who don’t. By now, most knowledge workers have typed something into ChatGPT. That’s not a competitive advantage anymore. That’s table stakes.

The gap is between people who can install tools on their machines and people who can’t.

And most corporate workers can’t.

The gains everyone talks about aren’t the real gains

You’ve read the articles. AI saves time on emails. AI summarises meetings. AI helps with first drafts.

All true. All modest.

The St. Louis Fed found that workers using generative AI save about 2.2 hours per week on average. Useful. But not the story people are telling at conferences.

The story changes when you talk to someone running Claude Code or ChatGPT Codex. In my experience, certain tasks that took most of a day or even several days now take an hour. Research that required pulling together information from dozens of sources happens in a single session. The shift isn't incremental. It's categorical.

The difference isn’t the model. It’s the mode.

Chat interfaces are helpful. Tools that can read files, search the web, execute code, and work through multi-step problems autonomously are a different category entirely.

Most corporate IT policies treat “AI” as one thing. They’ll approve a ChatGPT Enterprise licence while blocking employees from installing anything on the command line.

The tools doing the heavy lifting require installation. They require permissions most workers don’t have.

What I actually use

Let me be concrete about what’s working. Not theory. Practice.

Voice mode as a thinking partner. I don’t use AI primarily for writing. I use it for thinking. I talk through prioritisation decisions, ask where the gaps are in my reasoning, pressure-test a strategy. The voice interface changes the relationship. It’s a conversation, not a text box. The output isn’t a document. It’s clarity.

Claude Code for non-coding work. This surprises people. Claude Code is marketed as a developer tool. But it’s a research and analysis engine that happens to run in the terminal. I use it to pull together information across sources, process documents, build context on companies or topics I need to understand quickly. It’s not just writing code for me. It’s doing research I’d otherwise spend hours on.

Gems for repeatable workflows. I’ve built custom Gems in Gemini for tasks I do repeatedly. Same structure, same output format, every time. This isn’t prompting from scratch. It’s building systems. The investment is upfront. The payoff is ongoing.

Browser-based agents to see what’s coming. I’ve been testing ChatGPT’s Atlas browser. The execution is still rough. But you can see where this is heading. The future of knowledge work isn’t asking AI to help you search. It’s telling AI what you need and letting it go find it, synthesise it, and bring back what matters.

None of this is “use ChatGPT for your status updates.” That’s the shallow version. The deeper version requires tools most corporate workers outside of development teams aren’t allowed to touch.

The IT permissions problem is the actual bottleneck

Developers will laugh at this. Depending on the company, they can install whatever they want.

But most corporate workers can’t install anything. They can’t run command line tools. They can’t add browser extensions without approval. They can’t connect external services to internal systems.

There are good reasons for this. Security. Compliance. Standardisation. The risks of uncontrolled software sprawl are real.

But the tension is obvious. The tools where the real productivity gains live are exactly the tools that require installation, local execution, and system access.

A browser-based chat window is safe. It’s also limited.

A tool that can read your files, search the web, execute code, and chain together complex tasks is powerful. It also needs permissions that most IT policies don’t grant.

The result is a widening gap. People with tool access are pulling ahead. People without it are stuck with the shallow version while reading articles about how much everything is supposed to be changing.

This won’t last

Right now, restrictive IT policies feel prudent. Control the tools, control the risk.

But competitive pressure has a way of forcing reconsideration.

When your competitor’s analyst can do in two hours what your analyst does in two days, the policy conversation changes. When candidates ask about AI tool access in interviews and walk away if the answer is wrong, the policy conversation changes. When entire teams leave for companies with better infrastructure, the policy conversation changes.

We’re not there yet. But we’re heading there.

McKinsey's 2025 research found that 92% of companies plan to increase AI investment over the next three years, but only 1% consider themselves mature in deployment. That gap is partly about strategy and training. But it's also about access. You can't develop real skill with these tools if your people can't use the ones that matter.

The organisations that figure this out first won’t just be more productive. They’ll attract and retain the people who know what’s possible.

The responsible version of expanded access

I’m not arguing for chaos. The “logic up, data stays down” principle still applies.

Use AI for reasoning, structure, and language. Don’t feed it client lists, unreleased financials, or personally identifiable information. If you can’t scrub it, don’t ship it.

But there’s a difference between sensible data hygiene and blanket tool prohibition.

The sensible approach: “Here’s what you can use, here’s what you can’t put into it, here’s how we monitor for compliance.”

The current approach at most companies: “We’ve approved this one chat interface. Everything else is blocked.”

One creates boundaries. The other creates a ceiling.

If you’re an individual contributor

You have two options.

Option one. Work within the constraints. Use whatever browser-based tools your company approves. Get the modest gains. Wait for IT policy to evolve.

Option two. Build skills on your own time. Learn the more powerful tools on personal projects. Understand what’s possible so you can advocate for better access internally. Be ready to move to an organisation that gets it.

I’m not telling you to violate your company’s policies. I am telling you that the people pulling ahead have access to tools you might not even know exist.

The 2.2 hours a week that everyone talks about? That’s the floor, not the ceiling. The ceiling is much higher. But you need different tools to reach it.

If you influence IT policy

The current equilibrium is unstable.

You can block these tools and maintain the security posture you have today. For now, that feels safe.

But the cost isn’t zero. The cost is paid in productivity gaps that widen every month. In talent that looks elsewhere. In competitive disadvantage that accumulates quietly until it’s obvious.

The question isn’t whether to allow more AI tools. It’s which tools, with which rules, for which use cases.

The companies that answer this well will build an advantage that grows over time. The ones that default to prohibition will wonder why their people seem slower than everyone else’s.

The uncomfortable truth about adoption

The AI productivity conversation has been domesticated. Use ChatGPT for emails. Summarise your meetings. Draft your first paragraphs.

That’s fine. It’s also the beginner version.

The advanced version involves tools that run locally, execute code, chain tasks together, and work through complex problems with minimal hand-holding. These require IT permissions most workers don’t have.

The gap between the beginner version and the advanced version is large and growing.

Most of the productivity stats being reported come from the beginner version. The advanced version is harder to measure because fewer people have access. But the anecdotal evidence from people who do is striking.

If you’re stuck on the beginner version, you’re not falling behind yet. But the distance is growing.

The window for business advantage

Eighteen months from now, these tools will be more capable and easier to deploy. The security and compliance tooling will mature. Early-adopter organisations will have developed playbooks for safe rollout.

The organisations that move first will have learned things. Their people will have built skills. Their workflows will have evolved.

The organisations that wait will be starting from scratch while their competitors are already running.

This isn’t about individual productivity hacks anymore. It’s about institutional capability.

The question for individuals is “am I building skills with the tools that matter?”

The question for organisations is “are we giving our people access to the tools where the real gains live?”

If the answer is no, that’s a problem. And it gets more expensive to solve the longer you wait.

When you need help to get unblocked

If you want to talk through challenges in getting AI transformation working in your business, I can help.

The AI Change Leadership Intensive is a structured diagnostic session where we map exactly which patterns are active in your specific context. We identify the few interventions most likely to shift adoption in the next 90 days. You leave with a written AI Change Snapshot you can take straight to your leadership team.

You can book your call now. I enjoy working with select clients and look forward to helping you unblock your AI transformation.

Regards,

Brennan

PS: If you haven’t subscribed to my AI Transformation YouTube channel yet, I’d really appreciate it. Here’s a video I recorded on getting better output from Gemini Deep Research: